| michael naimark |

IMU VR Camera

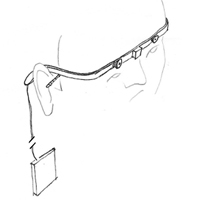

Michael Naimark Advisors: Naming Credit:

The IMU VR camera is a wearable camera system consisting of two tiny wide-angle HD video cameras, one just above each eye; two binaural microphones, one near each ear; and an Inertial Measurement Unit (IMU) in the center. All electronics for recording or streaming are housed off-board in a small pocket box. The audiovisual imagery is primarily intended to be experienced in VR headsets. The visual imagery, which may have a maximum 180 degree field of view (FOV), moves around the 360 degree panoramic sphere based on the IMU orientation data. For example, if the camera wearer "pans left" 90 degrees, the VR headset viewer will see the image leave their viewing zone and, it is speculated, will naturally follow it and turn their head accordingly.

One loosely defined genre of video, cinema, and gaming may be called the "This is What I See" genre. In cinema, it's generally referred to as "First Person" or "Point of View" (POV) shots and is used more as cutaway imagery. In conventional narrative cinema, talent is almost always instructed not to look directly into the camera, creating the feeling that the camera is an invisible "fly on the wall". For example, in Francis Coppola's cameo appearance in Apocalypse Now, he's a television director screaming "Don't look at the camera" to the troops running by. On rare occasions, films are shot entirely in first-person perspective, for example, "Enter the Void" and "Lady in the Lake" (there's even a Wikipedia list). Narrators, such as news and sports reporters, usually look directly into the camera, and many video games are played from a first-person perspective. The proliferation of small wearable video cameras such as GoPro Heros has drastically increased the interest in, and applications for, "helmet cams," and even smaller wearable cameras like those in Google Glass have also generated increasing concern.

It's well known that projecting stereoscopic imagery in front of the screen is problematic.
For one thing, when viewers sway their heads back and forth, even a little, the imagery sways with them rather than revealing the different viewpoints. But even when holding one's head perfectly still, near-field stereoscopic imagery on a screen many feet away taxes the basic psychophysics of convergence and accommodation. As such, most "3D movies" place the imagery behind the screen, like looking through a giant window, occasionally saving near-field imagery for dazzle such as flying bats, poking broomsticks, and gushing blood.

Headset VR can display near-field imagery in an entirely more natural way, particularly from CGI-based 3D models. VR game designers and storytellers already know this, and some of the most interesting work being made today exploits this "1-10 foot" or "intimate media" zone. The look is very different from a conventional close-up shot in conventional 2D cinema. Those familiar with the enhanced audio presence of binaural over stereo may say that headset VR can produce equally intimate visual presence.

The VR camera space has boomed very quickly, with cameras ranging from elegant spheres and cylinders the size of fruit to giant "pro-level" systems weighing hundreds of pounds. Almost all of them are capable of capturing 360 degrees around and, as such, require more than one camera (even "360 degree" fisheyes are hemispheric). Full 360 degree cameras fall into three categories: 2D, paired stereo, and unpaired stereo. None can record significant positional viewpoints, though one can imagine very large arrays of cameras offering limited position tracking. For a (now slightly dated) summary, please see VR Cinematography, a slideshow from September 2014.

Ironically, none are capable of producing perfectly seamless, stereoscopic motion picture imagery in the 1-10 foot zone, where stereo is most important.
The 2D cameras are not stereo, and though stereo may be simulated (for example, from nearby Lidar data, like Google Street View), the data is offset. Paired stereo cameras have discontinuities at the vertical seams, while unpaired stereo "spreads out" the artifacts more evenly. Computational approaches such as view interpolation and light fields may help (and fixing by hand may always be a credible but time-consuming option), but all such approaches, in the end, can never be perfect for every kind of scene. (A colleague once used looking through a keyhole as the perfect foil: when a camera array is placed close enough to a keyhole but with none of the cameras looking directly through, there is no data, period.)

The IMU VR camera can produce seamless wide-angle ortho-stereo video, and equivalent binaural audio, in both the intimate near-field zone and the large-scale far-field zone, around the entire 360 degree panoramic sphere, but with a hitch: not all at once. The viewer must follow the imagery as it moves. If the camera has 180 degree FOV lenses and the VR headset has 100 degree FOV displays, then there will be some "wiggle room" before the viewer may need to pan or tilt their head. But short of placing the viewer's head in a robotic vise, the viewer will need to voluntarily move their head to follow the action, and if they look in the other direction, they will see nothing.

Will it work, in that "seeing nothing" will not be distracting and that following the action will feel easy and natural? By far, almost all of the VR community is against anything short of "all-360, all-the-time". But then again, almost all of the VR community is very new to the field, with little firsthand experience. We don't know. But we have seasoned hunches.

Oh, did we also mention that the IMU VR camera can be made cheap, lightweight, and tiny; only uses two channels of video plus a small amount of additional data; and requires very little computational processing?
And we're certainly not against using it as a component in larger, full 360, 3D models.

We'd like to begin exploring three areas, or sub-genres or styles, artistically. We propose making several "Design Sketches" - more developed and finished than "demos" but more modest and frugal than "epics":

1) Interviews and Journalism - Interviews almost always take place within the "intimate zone," so we expect subjects to appear particularly present when shot right and viewed with VR headsets. And, most of the time, the interviewer is looking directly at the subject rather than looking around the room. Most interviews use a camera near, but necessarily off-axis from, the interviewer, and the offset eye contact of the subject has, to some, been a source of challenge from the earliest days of cinéma vérité to documentaries shot today. Our camera isn't perfect, but with the cameras an inch above each eye, the offset is less than the distance between the eyes, so, just as we usually can't tell which eye someone is making eye contact with, we think this will be negligible. Most importantly, we wonder whether the nature of the interview will change when the subject is truly looking at the interviewer. Of particular interest is what might be called "subjective journalism", where the camera wearer is roving around interacting with people.

2) Action Cams - One major new style of imagery to emerge with small cameras is the first-person POV action sequence, for example, a mountain bike run. Since the focus and attention are forward facing, it remains to be tested how much the far-side and rear views will be missed. For example, most "dark rides" in theme parks, like Disney's "Pirates of the Caribbean", have giant clamshell-like occluders behind each seat preventing riders from seeing too much behind, and the cars occasionally pan left and right as part of the program.
One interesting consequence is that image stabilization is "free", transforming wobbly imagery into rock-steady imagery with a wobbly frame (which may itself be expressive).

3) Integration into 3D Models - A legitimate concern among those in the VR community who use 3D models rather than cameras is that camera-based VR does not allow for position tracking, for free navigation, or for manipulation of the imagery (e.g., interacting with an avatar or blowing things up). The tradeoff between camera-based people wanting "realness" and model-based people wanting "interactivity", and how this roughly aligns with cinema versus games (and with Hollywood versus Silicon Valley), is not new and is well known. We see our imagery as useful for hybrid solutions, particularly as seamlessly integrated components of the "One Earth Model".

Our team is in place and we're beginning to build a simple but clunky prototype. Provisional patent applications were filed in December 2014 and in May 2015, which we may or may not elect to pursue. (For more on this, please read "Open Patent Protection", a short essay which ranks #1 on such Google searches, and watch "I Used to Think Patents Were Cool", a 36-minute video.) Given financial support, we expect to show a proof of concept in two months and, given a green light, expect to have the three Design Sketches completed four months later.
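
As a rough illustration of the "wiggle room" and follow-the-image behavior described earlier, the sketch below works through the arithmetic for the 180 degree camera and 100 degree headset example. It is a minimal sketch under stated assumptions, not the project's implementation: it considers yaw only (a real IMU reports full 3D orientation), and the function names `wrap_degrees`, `wiggle_room`, and `visible_fraction` are hypothetical, introduced here for illustration.

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def wiggle_room(camera_fov, headset_fov):
    """Degrees the viewer may lag to either side before an image edge appears."""
    return max(0.0, (camera_fov - headset_fov) / 2.0)


def visible_fraction(camera_yaw, viewer_yaw, camera_fov=180.0, headset_fov=100.0):
    """Fraction of the headset's horizontal view that is covered by imagery.

    Assumption (not from the source): yaw-only geometry, with
    camera_fov / 2 + headset_fov / 2 <= 180 so the image never wraps
    around the sphere and enters the display from the far side.
    """
    # The image is pinned to the sphere at the camera wearer's yaw, so its
    # center sits at this offset relative to where the viewer is looking.
    offset = wrap_degrees(camera_yaw - viewer_yaw)
    # Overlap of the image span with the display span, both in viewer-relative degrees.
    left = max(offset - camera_fov / 2.0, -headset_fov / 2.0)
    right = min(offset + camera_fov / 2.0, headset_fov / 2.0)
    return max(0.0, right - left) / headset_fov


if __name__ == "__main__":
    print(wiggle_room(180.0, 100.0))      # 40.0 degrees to either side
    print(visible_fraction(0.0, 0.0))     # 1.0: viewer looks where the wearer looks
    print(visible_fraction(90.0, 0.0))    # 0.5: wearer panned 90 degrees, viewer has not followed
    print(visible_fraction(90.0, 90.0))   # 1.0: viewer has turned to follow
    print(visible_fraction(0.0, 180.0))   # 0.0: viewer looks the other way and sees nothing
```

Because the image is placed on the sphere at the wearer's measured orientation, head wobble moves the frame rather than the scene content, which is the sense in which stabilization comes "free".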
