From VSMM98 Conference Proceedings

4th International Conference on Virtual Systems and Multimedia

Gifu, Japan

1998

Invited Paper

Field Cinematography Techniques for Virtual Reality

Applications

Michael NAIMARK

Interval Research Corporation, 1801-C Page Mill

Road, Palo Alto CA 94304 USA

Abstract. "Virtual Reality" (VR)

is practically defined by the requirement of 3D computer models

and realtime control by the user. While such properties afford interactive

navigation and manipulation, the imagery is relatively simplistic

or cartoon-like. Cinema is the opposite, with little or no interactivity

but with "photo-realistic" imagery. Most of the efforts around VR

have originated from the computer culture. This paper describes

complementary efforts from the cinema culture, including techniques,

case studies, challenges, and social implications.

1. Virtual Reality and Cinema

1. "Be Now Here" in Creative Time's "Art in the Anchorage" exhibition,

New York, 1997.

(photo: T. Westenberger)

1.1 Aesthetics of Telepresence

Much of the historical work in representation

has concentrated on conveying a sense of place. We can trace one

strand relating to visual representation from landscape and mural

paintings, to the panoramas and cycloramas of the nineteenth century,

to the special-venue cinema formats of today such as Imax, Showscan,

and CircleVision. These formats exploit high spatial and temporal

resolution, wide-angle and surround fields of view, multitrack audio,

and 3D stereoscopy. Their goal is to convey a sense of "being there"

– that is, of telepresence.

The "there" in most cinema is an actual physical

place, since the nature of cameras is to record whatever is in front

of the lens (even if that is the contrived environment of a studio).

The aesthetics of cinema are biased toward representations of actual

places; imaginary places must be created with additional work (i.e.,

special effects). Furthermore, virtually all cinema is linear and

non-interactive; the technology of film makes it difficult to give

the audience any control.

Hence, the aesthetics of telepresence from the

point of view of cinema are geared to present high sensory realism

in images of physical rather than imaginary places, with little

or no interactivity.

1.2. Cinema Culture and Computer Culture

The aesthetics surrounding the computer culture have traditionally

taken a contrasting point of view: low sensory realism in images

of imaginary rather than physical places, with interactivity as

a key element.

People often associate computer graphics with simplistic or cartoonlike

imagery. Developers must build computer-graphics models from scratch,

using drawing and painting tools as well as libraries of primitive

shapes and textures. Techniques such as photographic texture mapping

help such models to approach photorealism, but the imagery is still

far from conventional camera-based images alone. Current work in

image-based rendering shows promise but also demonstrates the complex

and problematic challenge of making 3D computer models from images.

Also, since such computer models must be built up from nothing,

it is generally easier to make imaginary and fantasy places than

to model physical environments. Hence, the aesthetics of the computer

culture have tended more toward the imaginary and fantasy, whereas

the cinema culture – particularly the documentary cinema culture

– has tended toward the actual world.

A more subtle difference between the computer and cinema cultures

is that a person must spend many hours in front of a workstation

to make computer models, whereas filmmakers must interact directly

with environments and people.

Many people who work with computers think that interactivity is

a critical characteristic, for both navigation and manipulation

(e.g., when the user specifies, "move that chair to the right").

Sensory and physical realism is secondary. People who work in cinema

often have the opposite priorities [1].

2. Cinematic Techniques for Interaction and

Immersion

2.1. Photorealism

Realness, or sensory realness, or

photorealism is relative to representation. In theory, we could

apply a photorealism Turing test to types of imagery: We would ask

viewers whether the representation is indistinguishable from its

subject. The problem is that virtually all current forms of visual

representation would fail. Even 3D Imax images are obviously still

only a movie, compared to reality. The human eye is extremely difficult

to fool. (The ear is much more fallible; we've all mistaken a voice

on the radio for the voice of a person present in the room.)

Consider the current range of photorealism for

dynamic visual representation: web- and MPEG- level video, broadcast-quality

video, theater-quality (e.g., 35mm) film, and special-venue (e.g.,

70mm and multiple-screen formats) film. Imax is advertised as having

10 times the resolution of standard 35mm film, and several special-venue

formats have twice the resolution of standard Imax [2]. To make

an ultimate CAVE, with four walls, ceiling, and floor all in Imax-quality

stereo, would require 12 times Imax resolution, or 120 times 35mm

film. Such a level of photorealism is orders of magnitude greater

than low-end formats.
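
The multiples quoted above follow from simple counting. The sketch below
reproduces them, assuming one Imax-quality image per surface per eye and
taking the advertised factor of 10 between Imax and 35mm at face value;
the function and constant names are ours, for illustration only.

```python
# Relative resolution, counted in whole Imax images and in 35mm-frame equivalents.
IMAX_VS_35MM = 10   # advertised ratio of Imax to standard 35mm resolution
CAVE_SURFACES = 6   # four walls, ceiling, and floor
EYES = 2            # stereo needs a separate image for each eye


def cave_resolution(surfaces=CAVE_SURFACES, eyes=EYES, imax_vs_35mm=IMAX_VS_35MM):
    """Return (multiples of Imax, multiples of 35mm) for an all-Imax stereo CAVE."""
    imax_multiples = surfaces * eyes
    return imax_multiples, imax_multiples * imax_vs_35mm


imax_x, film_x = cave_resolution()
print(imax_x, film_x)  # 12 120
```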

It is also noteworthy that the degree of perceived

realness is usually correlated with quality of content. When a presentation

is compelling, it seems real. Conversely, higher resolution does

not automatically make a presentation more convincing. The relationship

between photorealism of form and quality of content is complex.

2.2 Panoramas

Panoramas are generally regarded as wide-field

images; often, they represent a complete 360-degree field of view

(FOV). A panorama represents a single point of view and is by definition

two dimensional. Panoramas allow a viewer to look around (angular

movement, i.e., panning and tilting), but not to move around (lateral

movement, i.e., dollying and tracking).

We can make panoramic photographs using a single

lens (such as a fisheye, in conjunction with a convex mirror, or

with a rotating slit mechanism). We can tile together multiple images,

but if they are not taken from a single point of view (i.e., the

nodal point of the camera), then distortion is inevitable.

Panoramic imagery offers limited navigational

interactivity. A viewer can pan and tilt through a panoramic scene,

but can neither move laterally nor manipulate the imagery.

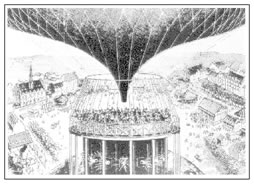

2. Le Cinéorama, a 10-screen panoramic film

theater by Raoul Grimoin-Sanson, Paris, 1900.

(gravure: La Nature)

2.3 Moviemaps

Moviemaps offer another kind of limited navigational

interactivity. They are filmed by stop-frame cameras that move along

a path and are triggered by distance from the viewed scene (typically

by a sensor attached to a wheel), rather than by time. Distance

triggering maintains constant speeds during playback at constant

frame rates, which is often not practical or possible during production

with conventional (time-triggered) movie cameras. The result is

the transfer of speed control from the producer to the viewer, who

controls the frame rate through an input device such as a joystick

or trackball.
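
Distance triggering can be sketched in a few lines. The example below is
a minimal illustration, not the control software of any actual rig: the
encoder resolution (metres_per_tick) and the frame spacing
(ticks_per_frame) are hypothetical values. The point is that the speed of
the camera carriage never enters the calculation, so frames are evenly
spaced along the path, and apparent speed on playback depends only on the
frame rate that the viewer selects.

```python
def trigger_ticks(total_ticks, ticks_per_frame):
    """Encoder tick counts at which a frame is exposed: one frame every N ticks."""
    return list(range(0, total_ticks + 1, ticks_per_frame))


def frame_positions_m(total_ticks, ticks_per_frame, metres_per_tick):
    """Distance along the path at which each frame was exposed."""
    return [tick * metres_per_tick
            for tick in trigger_ticks(total_ticks, ticks_per_frame)]


def apparent_speed_m_per_s(playback_fps, ticks_per_frame, metres_per_tick):
    """Speed the viewer appears to travel at for a chosen playback frame rate."""
    return playback_fps * ticks_per_frame * metres_per_tick


# Hypothetical rig: 0.25 m of travel per encoder tick, one frame every 4 ticks.
print(frame_positions_m(20, 4, 0.25))      # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
print(apparent_speed_m_per_s(2, 4, 0.25))  # 2.0
```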

In addition to speed control, limited control

of direction is possible if registered turns are filmed at intersections.

With match-cutting between a straight sequence and a turn sequence,

the user can "turn" from one route to another. The developer must

be careful to minimize visual discontinuities, such as sun position

and object (e.g., cars and people) transience. The goal is to make

the cuts appear seamless.

Moviemaps are "look-up" media, where all possible

views are pre-recorded and accessed via computer. Hence, they offer

only limited navigability: you can view only images that have been

pre-recorded (i.e., you cannot leave the paths). Like panoramas,

moviemaps limit interaction to navigation; viewers cannot manipulate

the imagery.
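
The look-up nature of a moviemap can be made concrete with a small data
structure. The sketch below is a simplified model under our own
assumptions: each path is a numbered run of frames, a turn is registered
at a single frame of that path, and a turn cuts directly to the first
frame of the target path. The class names, field names, and path names
are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Sequence:
    name: str
    frame_count: int
    # frame index on this path -> name of the path reachable by turning there
    turns: dict = field(default_factory=dict)


class Moviemap:
    def __init__(self, sequences, start):
        self.sequences = {s.name: s for s in sequences}
        self.current = self.sequences[start]
        self.frame = 0

    def step(self, delta):
        """Move along the current path; the viewer cannot leave its two ends."""
        last = self.current.frame_count - 1
        self.frame = max(0, min(last, self.frame + delta))
        return self.current.name, self.frame

    def turn(self):
        """Match-cut to another path if a turn was filmed at this frame."""
        target = self.current.turns.get(self.frame)
        if target is None:
            return False
        self.current = self.sequences[target]
        self.frame = 0
        return True


main = Sequence("main_street", 100, turns={40: "side_street"})
side = Sequence("side_street", 50)
viewer = Moviemap([main, side], "main_street")
print(viewer.step(40))    # ('main_street', 40)
print(viewer.turn())      # True
print(viewer.step(1000))  # ('side_street', 49)
```

Every view the user can reach is an entry in the table of pre-recorded
frames; nothing in the structure allows a view between paths.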

2.4 Stereoscopy and Multiple Perspectives

Stereoscopy, the sense of 3D that we get when

we perceive a scene through both eyes, requires two unique points

of view – one for each eye. People make stereoscopic photographs

and cinema using two lenses (and often two cameras) typically separated

by the normal human interocular distance. Two separate images must

be recorded and kept synchronized from recording to playback to

give the viewer the sense of 3D.

In theory, stereoscopy is successful only if the

viewer’s head is not allowed to move, because it represents

only two points of view. If head motion is allowed, every new perspective

encountered must be displayed. Although a system that can provide

all these views has been demonstrated in a limited way with pre-recorded

imagery [3], creating one is problematic because every possible

point of view cannot be filmed. Unlimited navigation is possible

with 3D computer models.

2.5 Orthoscopic Displays

Many VR displays combine three important sensory elements for

a maximum sense of presence: wide-angle FOV (for immersion), stereoscopy

(for 3D), and orthoscopy (for proper scale), often called wide-angle

ortho-stereo [4]. These displays fall into two main groups:

special-venue film formats (such as Imax Solido, 3D Imax, and Showscan

3D) and VR displays (such as head-mounted displays [HMDs] and CAVEs).

The film formats provide ultra-high resolution and group viewing

but are not interactive, whereas the HMDs and CAVEs are lower resolution

but allow the possibility of multiple perspectives through head-tracking

and 3D computer models.

3. Case Studies - "See Banff" and "Be Now

Here" [5]

3.1 See Banff

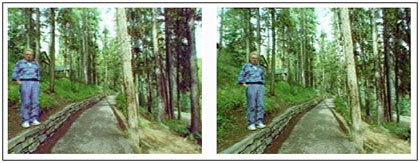

3. Johnston Canyon trail near Banff.

(stereo pair from See Banff - view cross-eyed)

See Banff! is a unique stereoscopic moviemap that

grew out of an exploration of field recording for VR [6]. We used

two stop-frame 16mm film cameras with wide-angle lenses, mounted

for stereoscopy on a "baby jogger" carriage, with an optical encoder

attached to one of the wheels to trigger the cameras at programmable

distance intervals.

The imagery was recorded entirely in the field,

outdoors in the Canadian Rocky Mountain region surrounding Banff,

Alberta. As is sometimes done in documentary film, we made no attempt

to control lighting or action: The goal was to record the

environment as it is.

In addition to recording the beauty of the landscape,

documenting the proliferation of tourists was an integral part of

the intention. As we worked in the field and interacted with both

local residents and tourists, it became apparent that there was

lively controversy surrounding tourism and growth; this dialog was

part of the experience of being in Banff. Aesthetically, conceptually,

and technically, having tourists appear in the foreground and the

landscape in the background added a strong sense of depth and presence.

Over 100 paths were recorded during a 6-week period.

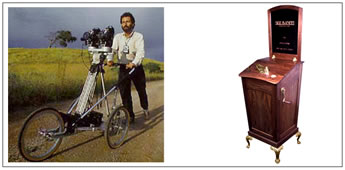

4. "See Banff!" camera rig and kinetoscope playback

system.

(photos: L. Psihoyos and M. Naimark)

The display system for See Banff! mimicked a 100-year-old

cinema viewing device: the kinetoscope. Thus, it mimicked the limitations

of the old stereoscopic viewing systems by using a stationary eye-hood

that prevents a viewer’s free head motion, as well as providing

nearly orthoscopic optics. The display also conformed to the limitations

of the one-dimensional travel along the paths by providing only

a one-dimensional user input device: a crank on the side of the

system. The crank employed a force-feedback brake that would freeze

at the beginning and end of each sequence. The user selects the

sequence to view by manipulating a lever near the eye-hood. The

prerecorded material was stored on a single laserdisc using field-sequential

stereo and LCD shutter optics.

Hence, the See Banff! kinetoscope provided a broadcast-quality

video wide-angle ortho-stereo viewing experience with one-dimensional

navigational control for a single user. It could not provide unlimited

navigation or any form of manipulation of the imagery.

3.2 Be Now Here

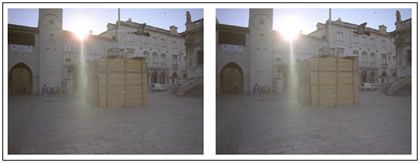

5. Orlando Column in Dubrovnik, covered for protection

from bombing.

(stereo pair from Be Now Here - view cross-eyed)

Be Now Here (Welcome to the Neighborhood) is a

unique stereoscopic panorama. We used two full-motion 35mm film

cameras mounted for stereoscopy on a motorized tripod that rotated

at 1 revolution per minute (rpm). To enhance the sense of telepresence,

we ran the cameras at 60 frames per second (fps), rather than the

standard 24 fps, and employed wide-angle (60-degree horizontal FOV)

optics.

The imagery was recorded in public plazas in the

four cities designated "In Danger" by the UNESCO World Heritage

Centre: Jerusalem, Dubrovnik (Croatia), Timbuktu (Mali), and Angkor

(Cambodia). The intention was to record these beautiful and

troubled environments.

The production concept was simple: Find in one

public plaza in each city a single spot that best represents each

place, then film several panoramas from that spot during the course

of the day without moving the camera system. The multiple times

of day would be perfectly registered and allow seamless intercutting,

with only the lighting and transient objects changing.

Due to the potentially hazardous and controversial

nature of the project, production was as inexpensive, fast, and

quiet as possible, relying on prearranged local staff at each site

and help from UNESCO to cross borders with the 500 pounds of film

gear. Through a great deal of planning and collaboration, all four

sites were filmed in 1 month. ("Highlights" included a bomb scare

in Jerusalem resulting in evacuation of the entire plaza, a drive

through a strip of Bosnia in the middle of the night during wartime,

a negotiation with Tuareg camel drivers in Timbuktu about issues

of appropriation, and a bribe to get the hired driver out of jail

after he had gotten lost after dark and had been found by the Cambodian

military [7].) Miraculously, all the footage survived.

Working with local collaborators was a critical

element in ensuring the quality of imagery. The selection of the

sites was heavily informed by local knowledge. More important, filming

in the middle of public plazas is a conspicuous activity, and the

fact that local collaborators knew many of the people in the plaza

helped to make everyone feel comfortable. Local people, particularly

children, didn't appear self-conscious – they simply did what

they would normally do in such places.

6. "Be Now Here" camera rig and installation.

(photos: G. Tassé and C. Dohrmann)

The display system for Be Now Here employed a

large (12- by 16-foot) front-projection screen capable of maintaining

polarity, two video projectors driven by laserdisc players, four-channel

surround audio, and a simple input pedestal that allowed a user

to choose the location as well as the time of day. The input pedestal

was positioned at the orthoscopically correct point for a 60-degree

FOV of the screen.
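
The orthoscopically correct viewing distance is the distance at which the
screen subtends the same horizontal angle as the camera lens did. A
minimal sketch of the geometry follows, assuming a flat screen viewed on
its central axis and assuming that the 16-foot dimension is the screen
width; neither assumption is stated above.

```python
import math


def orthoscopic_distance(screen_width, horizontal_fov_deg):
    """Distance at which a flat screen of the given width fills the given FOV."""
    half_angle = math.radians(horizontal_fov_deg) / 2
    return (screen_width / 2) / math.tan(half_angle)


# A 16-foot-wide screen and 60-degree optics (width assignment is our assumption).
print(round(orthoscopic_distance(16.0, 60.0), 2))  # 13.86
```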

We recreated the sense of camera rotation by rotating

the entire floor in sync with the imagery. A 16-foot diameter rotating

floor was used as the viewing platform, with the input pedestal

in the center. This space was totally dark except for the screen,

resulting in a strong visceral illusion: Viewers believed that the

screen was rotating around them, rather than that they themselves

were rotating. The effect is similar to the feeling of motion that

you get when you are sitting in a stationary train in the station

and an adjacent train begins to move.

After several public and private screenings, it

was apparent that the 1-rpm rotation of the floor was too fast for

some people to ignore. Tests suggested that, at 0.5 rpm, almost

nobody would feel dizzy, but the illusion of a rotating screen would

remain intact. The floor was slowed and the laserdiscs were remastered

at one-half the original speed (30 fps). An unintentional result

was that all motion in the images – of people, animals, and

vehicles – was now in "slow motion," making the representation

less real and more abstract, an effect which could be construed

as more arty and less techy [8]. Nevertheless, most viewers reported

a more compelling immersive experience.
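
The slow-motion side effect follows directly from the numbers. The sketch
below works through them under the assumption that the image rotation
must stay locked to the floor rotation; the function names are ours.

```python
def frames_per_revolution(camera_fps, camera_rpm):
    """Frames exposed during one full turn of the camera."""
    return camera_fps * 60 / camera_rpm


def playback_fps_for_floor(camera_fps, camera_rpm, floor_rpm):
    """Playback rate that keeps the image rotation in sync with the floor."""
    seconds_per_floor_rev = 60 / floor_rpm
    return frames_per_revolution(camera_fps, camera_rpm) / seconds_per_floor_rev


def motion_speed_factor(camera_fps, playback_fps):
    """Speed of recorded motion on playback, relative to real time."""
    return playback_fps / camera_fps


print(frames_per_revolution(60, 1))        # 3600.0
print(playback_fps_for_floor(60, 1, 0.5))  # 30.0
print(motion_speed_factor(60, 30))         # 0.5
```

Halving the floor speed halves the playback rate, so everything that
moved in front of the cameras moves at half speed on the screen.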

Be Now Here provided a twice-broadcast-quality

video wide-angle ortho-stereo viewing experience with limited navigational

control (discrete choice of place and time) for group viewing. Like

See Banff!, it could not provide unlimited navigation or any form

of manipulation of the imagery.

4. The Challenge of Converging Cinema and Computing

4.1 Dimensionalization: Making 2D into 3D

Dimensionalization is making a 3D model

from one or more 2D images. Image-based rendering may fulfill

a common dream in many VR circles: to wave a camera around an actual

place and to end up with a 3D computer model. But, as we are learning,

many obstacles prevent us from realizing this fantasy.

Perhaps the most difficult problem is how to resolve

occlusions, the "holes" that are left after we aggregate

all 2D images into a 3D model. Simply put: How do we fill in a blank

when we have no information? In many classes of imagery, occlusions

are inevitable, even with many 2D views, such as of forests, crowds,

street scenes, or almost any complex and unstructured environment.
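
A toy example shows where the holes come from. The sketch below
forward-warps a single image row with a known depth per pixel to a
laterally displaced viewpoint. It is a deliberately simplified model of
our own (integer pixel shifts inversely proportional to depth, nearest
surface wins), not a description of any particular image-based rendering
system; shift_scale is a hypothetical constant standing in for camera
baseline and focal length.

```python
def reproject_row(colors, depths, shift_scale):
    """Warp one image row to a new viewpoint; None marks pixels with no data."""
    width = len(colors)
    out = [None] * width
    out_depth = [float("inf")] * width
    for x, (color, z) in enumerate(zip(colors, depths)):
        # Near pixels (small z) shift farther than distant ones.
        nx = x + round(shift_scale / z)
        # Keep the nearest surface when two pixels land on the same spot.
        if 0 <= nx < width and z < out_depth[nx]:
            out[nx] = color
            out_depth[nx] = z
    return out


# "F" is a near foreground object (depth 2); "B" is distant background (depth 10).
colors = ["B", "B", "B", "F", "F", "B", "B", "B"]
depths = [10, 10, 10, 2, 2, 10, 10, 10]
print(reproject_row(colors, depths, 4))
# ['B', 'B', 'B', None, None, 'F', 'F', 'B']
```

The two None entries are the background that the foreground object hid in
the original view. No amount of processing of this one image can recover
them; the information must come from another view or be invented.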

Another question is for what purposes 3D models

are necessary. Several different 2D panoramic formats currently

exist on the web, including QuickTime VR, PhotoBubbles, and IPIX.

Since panoramas represent a view from only one point in space, they

are relatively easy to record. As 2D databases, they require much

less storage than a comparable 3D database. But they allow only

angular, rather than lateral, navigation, and they afford no manipulation.

For some applications, panoramas alone may provide sufficient virtual

reality.

4.2 Segmentation - Making Non-Semantic "Models"

into Semantic Models

Segmentation – adding higher-level

or semantic knowledge to an image – can be done by hand or

by computer. One particular class of 3D visual databases consists

of only points in space, and includes no semantic knowledge by the

system. This class includes light-fields and 3D images made with

depth-maps. Standard 3D computer models, built from primitives,

are semantic models: The system "knows" the contents of the database.

Semantic models are required for any kind of interactive manipulation

of the imagery. Light-field and depth-map 3D databases are nothing

but "clouds" of pixels (whether they are even "models" is debatable).

As such, they allow unlimited navigation but no manipulation.
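
The distinction can be illustrated with a request such as "move that
chair to the right." In the sketch below, a hypothetical point database
carries an optional label per point. The labels here are assigned by
hand, standing in for whatever segmentation process is used; the class
and function names are ours.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Point:
    x: float
    y: float
    z: float
    label: Optional[str] = None  # None: the system does not "know" what this is


def move_object(points: List[Point], label: str, dx: float) -> int:
    """Shift every point carrying `label` along x; return how many points moved."""
    moved = 0
    for p in points:
        if p.label == label:
            p.x += dx
            moved += 1
    return moved


# A non-semantic cloud: positions only.
cloud = [Point(0.0, 0.0, 2.0), Point(0.5, 0.0, 2.0), Point(3.0, 0.0, 5.0)]
print(move_object(cloud, "chair", 1.0))  # 0

# After segmentation (done by hand here), the same request can be carried out.
cloud[0].label = "chair"
cloud[1].label = "chair"
print(move_object(cloud, "chair", 1.0))  # 2
```

Rendering the cloud from a new viewpoint needs only the coordinates, which
is why navigation works without labels while manipulation does not.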

Like full 3D models, semantic models may not be

necessary for all applications. Real-world precedents exist for

viewers to enjoy navigation without manipulation (through nature

trails, ancient ruins, religious temples, and so on).

4.3 Automation or Human Intervention

Making 2D into 3D (dimensionalization) and making

non-semantic into semantic models (segmentation) are both possible

when there are humans in the loop. Some of the processes have been

automated and some will be automated. But it's not clear that all

the decisions required should be automated.

Much of the human labor today associated with

dimensionalization and segmentation is by default: The work is neither

desirable nor enjoyable, but automation doesn't exist. The Hollywood

special-effects community relies on such human intervention. Clearly,

such processes would be best automated.

There is, however, another class of decisions

for which human intervention is desirable, particularly regarding

segmentation and semantic modeling. In some cases, determining what

are the "most important" elements in a scene is a matter of human

expression and of art.

4.4 Immersive Virtual Environments

The two most prominent immersive virtual environments

today are created with HMDs or with CAVEs. Both techniques are problematic.

HMDs are relatively low resolution and encumbering; CAVEs require

several projectors and space. Because of head-tracking, both HMDs

and CAVEs are optimized for only one user, even though CAVEs can

comfortably accommodate several other viewers.

Before we can have high-quality, ubiquitous immersive

virtual environments, we need to overcome several technological

hurdles. For example, we need high display resolution and brightness,

no viewer encumbrance, and accurate head tracking.

4.5 Cameras of the Future

Although we may be able to do a limited amount

of VR conversion from pre-existing images, cameras used for most

VR applications today fall into two categories: (1) standard, mass-produced

cameras that have been modified, specially mounted, or instrumented;

or (2) extremely heavy, expensive contraptions, such as CyberScans,

motion-control rigs, or time-of-flight lasers.

No one has designed an inexpensive camera specifically

for VR applications. A golden opportunity exists here.

5. Work in the Real World

The state of the world is precariously uneven in terms of resources.

Although many may believe that computers will save the world, North

American scientists have access to almost 40% of the world's R&D

investment, while the entire continent of Africa only has 0.5% [9],

and less than 10% of the children of the world will have access

to computers and the Internet by the year 2000 [10].

The state of the world is also unimaginably rich in terms of culture.

VR can be an important communications medium for world culture,

but only if those of us lucky enough to have access to the tools

are sensitive enough to work with and learn from local expertise.

If not, the loss will ultimately be ours.

References

[1] M. Naimark, Realness and Interactivity. In: B. Laurel

(ed.), The Art of Human Computer Interface Design. ISBN 0-201-51797-3.

Addison Wesley, Reading, MA, 1990, pp. 455-459.

[2] M. Naimark, Expo '92 Seville, Presence vol. 1

no. 3 (1992) 364-369.

[3] S. S. Fisher, Viewpoint Dependent Imaging: An Interactive

Stereoscopic Display, SPIE vol. 367 (1982) 41-45.

[4] E. M. Howlett, Wide-Angle Orthostereo, SPIE vol.

1256 (1990) 210-223.

[5] M. Naimark, A 3D Moviemap and a 3D Panorama, SPIE

vol. 3012 (1997) 297-305. (online at www.interval.com)

[6] M. Naimark, Field Recording Studies. In: M. A. Moser

and D. MacLeod (eds.), Immersed in Technology. ISBN 0-262-13314-8.

MIT Press, Cambridge, MA, 1996, pp. 299-302. (online at www.interval.com)

[7] M. Naimark, Trip Reports from the Be Now Here production.

(see http://www.naimark.net/writing/trips/bnhtrip.html)

[8] M. Naimark, What’s Wrong with this Picture? Presence and

Abstraction in the Age of Cyberspace. In: Roy Ascott (ed.), Consciousness

Reframed: Art and Consciousness in the Post-biological Era. ISBN:

1 899274 03 0. University of Wales College, Newport, 1997. (online

at www.interval.com)

[9] F. Mayor, Science and Power: A New Commitment for the 21st

Century, UNESCO Director General's address to the Association

for the Advancement of Science, Washington, D.C., 25 June 1998

(see www.unesco.org).

[10] N. Negroponte, 2b1 Foundation Mission Statement (see

http://www.2b1.org/mission.html).
